“Garbage In, Garbage Out” is a well-known principle in machine learning: the quality of the training data directly impacts the quality of the AI/ML model. The same principle holds true for image annotations.

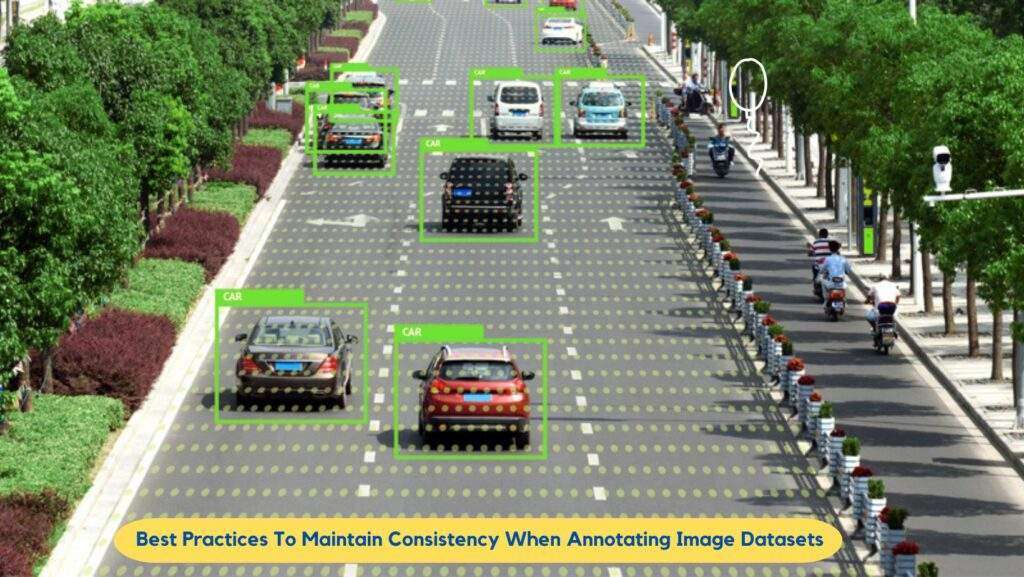

Consider a scenario where you’re using image annotation to prepare a dataset for training an autonomous vehicle’s object recognition system. If you mislabel a motorbike in an image as a bicycle (Garbage In), the error can distort the model’s understanding of what a motorbike looks like. The model may then fail to identify motorbikes correctly, which impairs the vehicle’s ability to navigate safely and endangers passengers, pedestrians, and other road users (Garbage Out). Even minor annotation inaccuracies like this can have real-world consequences.

To avoid these pitfalls, it’s essential to invest time and effort in meticulous data labeling. This ensures that the quality of your annotations aligns with the high standards required for your machine learning model’s success.

Here are some best practices to maintain accuracy and consistency in image datasets while annotating your images.

Best Image Labeling Practices For Better AI/ML Model Accuracy

Before you start annotating, make sure the raw data (the images themselves) is clean. Data cleaning removes low-quality, duplicate, and irrelevant images from the dataset. Removing these unwanted images is a fundamental first step: annotation effort is spent only on useful data, and the AI model is trained on accurate, relevant examples.
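Exact duplicates are the easiest of these to catch automatically. The sketch below assumes the images have already been read into memory as bytes and groups files by a SHA-256 hash of their contents. The function name `find_exact_duplicates` is hypothetical, and the approach only finds byte-identical copies: resized or re-encoded near-duplicates need a perceptual hash, and low-quality or irrelevant images need separate checks.

```python
import hashlib


def find_exact_duplicates(images: dict[str, bytes]) -> tuple[list[str], dict[str, str]]:
    """Split images into unique files and exact byte-for-byte duplicates.

    `images` maps a file name to its raw bytes. Returns the names to keep
    (the first occurrence of each distinct content, in input order) and a map
    from each duplicate's name to the name of the file it duplicates.
    """
    first_seen: dict[str, str] = {}  # content hash -> first file name with that content
    keep: list[str] = []
    duplicates: dict[str, str] = {}
    for name, data in images.items():
        digest = hashlib.sha256(data).hexdigest()
        if digest in first_seen:
            duplicates[name] = first_seen[digest]
        else:
            first_seen[digest] = name
            keep.append(name)
    return keep, duplicates


# Usage with in-memory stand-ins for image files (real code might use Path.read_bytes()).
images = {"a.jpg": b"\xff\xd8abc", "b.jpg": b"\xff\xd8xyz", "a_copy.jpg": b"\xff\xd8abc"}
keep, dups = find_exact_duplicates(images)
print(keep)  # ['a.jpg', 'b.jpg']
print(dups)  # {'a_copy.jpg': 'a.jpg'}
```

Hashing file contents rather than comparing file names means renamed copies are still caught.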

Establish clear annotation guidelines

Clear guidelines serve as a structured set of instructions for annotators, outlining precisely what aspects of an image they should annotate. For instance, if you are labeling a cat, a well-defined guideline will specify the particular focus, such as breed, color, or other relevant characteristics, that should be used to annotate the cat. This level of clarity ensures all the cats in the dataset are labeled in a uniform manner, thereby preventing disparities in how data is recorded.

Without such guidelines, annotators may interpret the task differently, which leads to inconsistencies: one annotator might record the breed on cat images while another records the cat’s color.

Clear guidelines also reduce the chance that personal biases or interpretations creep into the annotations.
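One way to make a guideline enforceable is to encode part of it as a machine-readable schema and check each annotation against it. The sketch below is a hypothetical example built around the cat scenario above: the `GUIDELINE` dictionary, its breed list, and the `check_annotation` helper are illustrative assumptions, not features of any particular annotation tool.

```python
from dataclasses import dataclass


@dataclass
class LabelRule:
    """One entry of a hypothetical machine-readable annotation guideline."""
    required_attributes: dict[str, frozenset[str]]  # attribute name -> allowed values


# Hypothetical guideline: every "cat" must be annotated with its breed,
# chosen from a fixed list, so all annotators record the same property.
GUIDELINE: dict[str, LabelRule] = {
    "cat": LabelRule(
        required_attributes={
            "breed": frozenset({"siamese", "persian", "maine_coon", "unknown"}),
        }
    ),
}


def check_annotation(label: str, attributes: dict[str, str]) -> list[str]:
    """Return a list of guideline violations (empty if the annotation conforms)."""
    rule = GUIDELINE.get(label)
    if rule is None:
        return [f"label {label!r} is not defined in the guideline"]
    problems = []
    for attr, allowed in rule.required_attributes.items():
        value = attributes.get(attr)
        if value is None:
            problems.append(f"missing required attribute {attr!r}")
        elif value not in allowed:
            problems.append(f"{attr}={value!r} is not an allowed value")
    # Attributes outside the guideline signal that someone is labeling a different property.
    for attr in attributes:
        if attr not in rule.required_attributes:
            problems.append(f"attribute {attr!r} is not part of the guideline")
    return problems


print(check_annotation("cat", {"breed": "siamese"}))  # []
print(check_annotation("cat", {"color": "black"}))    # flags missing breed and unexpected color
```

The second call reproduces the inconsistency described above: an annotator who records color instead of breed is flagged immediately rather than discovered during training.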

Label occluded objects

In image annotation, occluded objects are objects that are partially hidden from view by something in front of them. Occlusion is common in real-world scenes, where objects of interest are often not entirely visible.

A common error in annotating occluded objects is drawing partial bounding boxes around the visible portion of the object. This practice can lead to inaccuracies and hinder the model’s ability to understand the complete object.

To maintain consistency and accuracy, label occluded objects as if they were fully visible: draw the bounding box around the object’s estimated full extent, not just the visible part. This way, the dataset captures the object’s complete size and shape even when it is partially obstructed.

In some cases, multiple objects of interest may appear occluded in a single image. When this happens, it’s acceptable for bounding boxes to overlap. As long as each object is accurately labeled, the overlapping boxes do not pose a problem.
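The occlusion convention can be made explicit in the annotation record itself. In this hypothetical schema (the `Box` and `ObjectAnnotation` classes and the `visible_box` field are assumptions for illustration), `box` always holds the object’s estimated full extent, an `occluded` flag marks partially hidden objects, and an optional `visible_box` records the visible part, so the visible fraction can be roughly approximated as a ratio of box areas. Boxes of different objects may overlap freely.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Box:
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)

    def contains(self, other: "Box") -> bool:
        return (self.x_min <= other.x_min and self.y_min <= other.y_min
                and self.x_max >= other.x_max and self.y_max >= other.y_max)


@dataclass
class ObjectAnnotation:
    label: str
    box: Box                           # estimated FULL extent of the object, even where hidden
    occluded: bool = False
    visible_box: Optional[Box] = None  # optional: the part that is actually visible

    def visible_fraction(self) -> float:
        """Rough share of the full-extent box that is visible (1.0 if not occluded)."""
        if not self.occluded or self.visible_box is None:
            return 1.0
        if not self.box.contains(self.visible_box):
            raise ValueError("visible_box must lie inside the full-extent box")
        return self.visible_box.area() / self.box.area()


# A car partly hidden behind a pedestrian: the car's box still covers the whole car.
car = ObjectAnnotation("car", Box(0, 0, 100, 50), occluded=True, visible_box=Box(0, 0, 60, 50))
pedestrian = ObjectAnnotation("pedestrian", Box(55, 0, 80, 50))  # overlaps the car's box; that is fine
print(car.visible_fraction())  # 0.6
```

Keeping the full-extent box as the primary annotation preserves the "label as if fully visible" rule, while the optional visible part is extra information rather than a replacement.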

Use annotation tools with built-in validation

When annotating image datasets, consider using annotation tools with built-in validation features. Such tools automatically check annotations for common errors and inconsistencies, such as duplicate boxes on the same object, boxes that extend beyond the image, or improperly closed polygons. Tools worth evaluating include Labelbox, SuperAnnotate, and LabelImg.

Remember to evaluate these tools based on your specific project requirements, budget, and ease of integration into your workflow. Additionally, the choice of tool should align with the level of validation required to maintain consistency in your image datasets.
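Validation rules differ from tool to tool, so the sketch below does not reproduce any particular product’s checks. It is a hypothetical, tool-independent illustration of the kind of rules such validation applies: boxes with no area, boxes outside the image, likely duplicate boxes (same label and an IoU of at least an assumed threshold of 0.95), and polygons with fewer than three distinct vertices, which cannot enclose an area. Overlap between boxes with different labels is deliberately allowed, in line with the guidance on occluded objects.

```python
from itertools import combinations


def iou(a, b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def validate(image_w, image_h, boxes, polygons, dup_iou=0.95):
    """Return human-readable issues for one image's annotations.

    boxes:    list of (label, (x_min, y_min, x_max, y_max))
    polygons: list of (label, [(x, y), ...])
    """
    issues = []
    for i, (label, (x0, y0, x1, y1)) in enumerate(boxes):
        if x1 <= x0 or y1 <= y0:
            issues.append(f"box {i} ({label}) has zero or negative area")
        if x0 < 0 or y0 < 0 or x1 > image_w or y1 > image_h:
            issues.append(f"box {i} ({label}) extends outside the image")
    # Overlap between different objects is normal; a near-identical box with
    # the same label usually means the object was annotated twice.
    for (i, (la, a)), (j, (lb, b)) in combinations(enumerate(boxes), 2):
        if la == lb and iou(a, b) >= dup_iou:
            issues.append(f"boxes {i} and {j} ({la}) look like duplicates")
    for k, (label, points) in enumerate(polygons):
        if len(set(points)) < 3:
            issues.append(f"polygon {k} ({label}) has fewer than 3 distinct vertices")
    return issues


boxes = [
    ("car", (10, 10, 110, 60)),
    ("car", (11, 10, 110, 60)),    # near-identical copy of box 0
    ("person", (90, 0, 130, 80)),  # overlaps box 0 but is a different object: allowed
    ("sign", (600, 20, 660, 90)),  # the image is only 640 px wide
]
polygons = [("road", [(0, 400), (640, 400)])]
for issue in validate(640, 480, boxes, polygons):
    print(issue)
```

Running the checks on every saved annotation, rather than only at the end of a batch, lets annotators fix mistakes while the image is still in front of them.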

Maintain consistent labeling styles & metadata

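Consistency extends to the way objects are labeled in images. Ensure that the labeling style remains uniform throughout the dataset: the same class names and spelling (for example, always "motorbike", never a mix of "motorbike", "Motorbike", and "motorcycle"), the same conventions for how tightly boxes fit objects, and the same display settings, such as color, line thickness, and text placement, in the annotation tool. Consistent class names and box conventions make it easier for the model to learn and generalize from the data, while consistent display settings make annotations easier to review.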
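Class names are the part of the labeling style the model actually learns from, so it can help to normalize them against a single canonical list before training. The class list and alias table below are hypothetical. An ambiguous term such as `bike` is deliberately left unmapped, so it is rejected for review instead of being silently turned into either `bicycle` or `motorbike`.

```python
# Hypothetical canonical class list and alias table for the project.
CANONICAL = {"motorbike", "bicycle", "car", "pedestrian"}
ALIASES = {
    "motorcycle": "motorbike",
    "motor bike": "motorbike",
    "person": "pedestrian",
    # "bike" is intentionally absent: it could mean either bicycle or motorbike.
}


def normalize_label(raw: str) -> str:
    """Map a free-form label onto its canonical class name, or raise if unknown."""
    key = " ".join(raw.strip().lower().split())  # trim, lowercase, collapse inner spaces
    key = ALIASES.get(key, key)
    if key not in CANONICAL:
        raise ValueError(f"unknown label {raw!r}; fix the annotation or extend the guideline")
    return key


print([normalize_label(x) for x in ["Motorbike", " motorcycle", "Motor  Bike", "car"]])
# ['motorbike', 'motorbike', 'motorbike', 'car']
```

Raising an error for unknown labels, instead of passing them through, forces every new spelling or class to be resolved explicitly in the guideline.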

In addition to object annotations, metadata associated with each image should also be consistent. This includes information such as image resolution, file format, camera settings, and timestamps. Consistent metadata can be invaluable when analyzing and processing the dataset for training and evaluation.
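A simple automated report can surface metadata inconsistencies before they cause problems. The sketch assumes per-image metadata is available as dictionaries with hypothetical keys `file`, `width`, `height`, `format`, and `timestamp`, and that the team has agreed on ISO-format timestamps. It flags missing fields, timestamps that Python’s `datetime.fromisoformat` cannot parse, and any resolution or file format that differs from the most common one. Whether a mixed resolution is actually a problem depends on the project, so the report only surfaces it.

```python
from collections import Counter
from datetime import datetime

# Hypothetical per-image metadata schema for this project.
REQUIRED_FIELDS = ("width", "height", "format", "timestamp")


def _majority_outliers(records, field_name, value_of):
    """Flag records whose value differs from the most common value of that field."""
    counts = Counter(value_of(r) for r in records)
    majority = counts.most_common(1)[0][0]
    return [
        f"{r.get('file', '<unnamed>')}: {field_name} {value_of(r)} differs from majority {majority}"
        for r in records
        if value_of(r) != majority
    ]


def metadata_report(records: list[dict]) -> list[str]:
    """Return consistency issues found in a list of per-image metadata records."""
    issues = []
    for rec in records:
        name = rec.get("file", "<unnamed>")
        missing = [f for f in REQUIRED_FIELDS if f not in rec]
        if missing:
            issues.append(f"{name}: missing {', '.join(missing)}")
        ts = rec.get("timestamp")
        if ts is not None:
            try:
                datetime.fromisoformat(ts)  # the single agreed timestamp format
            except (TypeError, ValueError):
                issues.append(f"{name}: timestamp {ts!r} is not in ISO format")
    if records:
        issues += _majority_outliers(records, "resolution", lambda r: f"{r.get('width')}x{r.get('height')}")
        issues += _majority_outliers(records, "format", lambda r: r.get("format"))
    return issues


records = [
    {"file": "001.png", "width": 1920, "height": 1080, "format": "png", "timestamp": "2024-03-01T10:15:00"},
    {"file": "002.png", "width": 1920, "height": 1080, "format": "png", "timestamp": "2024-03-01T10:16:00"},
    {"file": "003.jpg", "width": 1280, "height": 720, "format": "jpeg", "timestamp": "03/01/2024 10:17"},
    {"file": "004.png", "width": 1920, "height": 1080, "format": "png"},
]
for issue in metadata_report(records):
    print(issue)
```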

Address ambiguities and edge cases

Image annotation is not always straightforward, and annotators will encounter ambiguous images and edge cases. Document these cases and seek guidance when uncertain. Establish clear procedures for handling such situations, such as consulting domain experts or escalating through a defined decision-making hierarchy.

Conduct inter-annotator agreement (IAA) analysis

Inter-annotator agreement (IAA) is a statistical measure of how consistently multiple human annotators label the same data, such as images, when they work independently. It assesses how reliable the annotations produced by different people are, and running IAA analysis regularly highlights where consistency needs improvement. Common IAA metrics include Cohen’s kappa for categorical labels assigned by two annotators, and Intersection over Union (IoU, also known as the Jaccard index) for comparing bounding boxes or segmentation masks.
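To make these metrics concrete, the sketch below implements Cohen’s kappa, which compares the observed agreement between two annotators with the agreement expected by chance from each annotator’s own label frequencies, and IoU for two axis-aligned boxes given as `(x_min, y_min, x_max, y_max)`. A kappa of 1 means perfect agreement and 0 means agreement no better than chance. This is an illustrative implementation; scikit-learn’s `sklearn.metrics.cohen_kappa_score` computes the same statistic. Comparing boxes across annotators also requires first matching each box to its counterpart, a step omitted here.

```python
from collections import Counter


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equally long, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Agreement expected by chance if each annotator picked labels independently
    # at their own observed frequencies.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used the same single label for every item
    return (observed - expected) / (1.0 - expected)


def iou(box_a, box_b) -> float:
    """Intersection over Union (Jaccard index) of two (x_min, y_min, x_max, y_max) boxes."""
    ix = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    iy = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = ix * iy
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two annotators agree on 3 of 4 images; chance agreement is 0.5, so kappa = 0.5.
print(cohens_kappa(["cat", "cat", "dog", "dog"], ["cat", "dog", "dog", "dog"]))  # 0.5
# Two 10x10 boxes offset by 5 px in each direction: 25 / 175.
print(round(iou((0, 0, 10, 10), (5, 5, 15, 15)), 3))  # 0.143
```

Kappa is preferred over raw percent agreement because it discounts the agreement two annotators would reach by chance alone, which matters when one class dominates the dataset.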

Create a feedback loop

To maintain consistency, establish a feedback loop between your annotation team and project stakeholders. Beyond catching inconsistencies, this loop is a collaborative mechanism that fosters teamwork and a shared purpose, so that everyone works toward a common goal: a high-quality, consistent, and reliable annotated image dataset.

To implement this feedback loop:

- Open communication channels: Ensure everyone involved can easily communicate via email, messaging, or team meetings.

- Encourage questions: Make it clear that asking questions is encouraged, especially for challenging cases.

- Scheduled team meetings: Hold regular meetings to share experiences and solve common challenges.

- Document feedback: Keep records of feedback and resolutions for future reference.

- Continuous improvement: Use the feedback loop to evolve and adapt as the project progresses.

Bonus tip: Train AI/ML Models Cost-Effectively with the Right Image Annotation Partner

Preparing a dataset to train an AI/ML model is a continuous, iterative task that usually takes time and multiple rounds of annotation. You can set up an in-house team, crowdsource the work, or outsource it. With crowdsourcing, annotator skill varies widely and oversight is limited, which can result in inconsistent, error-prone labels and poor model performance. In-house teams, on the other hand, may produce higher-quality annotations but scale poorly and require significant management overhead.

The better solution, then, is to partner with a professional image annotation company, provided you choose one with highly skilled annotators, robust training protocols, and multi-stage QA checks. Working with such a provider lets you tap into its expertise and resources, helping your AI project thrive while keeping your budget in check.